This week's main topic was reasoning under uncertainty.

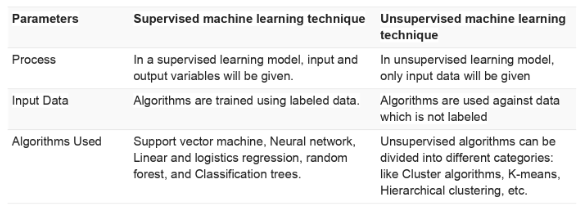

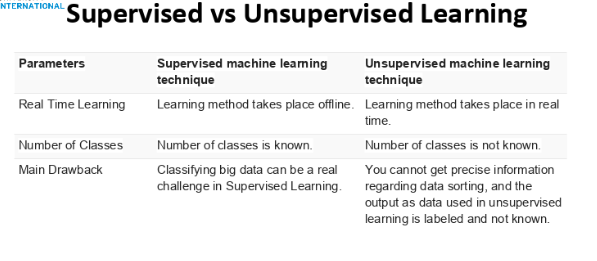

More specifically, we learned about the three types of machine learning (supervised, unsupervised, and reinforcement learning) and their definitions.

More detail was put into the definitions of supervised and unsupervised learning. We then reviewed discrete probability calculations, such as the probability of a union of events.
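As a quick refresher on the union calculation, here is a minimal sketch of the rule P(A ∪ B) = P(A) + P(B) − P(A ∩ B). The fair six-sided die, the events `A` and `B`, and the helper `prob` are assumptions chosen for illustration, not examples taken from the lecture.

```python
from fractions import Fraction

# Assumed example: one roll of a fair six-sided die.
sample_space = set(range(1, 7))

def prob(event):
    """Probability of an event when all outcomes are equally likely."""
    return Fraction(len(event & sample_space), len(sample_space))

A = {x for x in sample_space if x % 2 == 0}  # roll is even: {2, 4, 6}
B = {x for x in sample_space if x > 3}       # roll is greater than 3: {4, 5, 6}

# Count the union directly, then check it against inclusion-exclusion.
p_union_direct = prob(A | B)
p_union_formula = prob(A) + prob(B) - prob(A & B)

print(p_union_direct, p_union_formula)  # 2/3 2/3
```

Subtracting P(A ∩ B) matters because the outcomes 4 and 6 belong to both events and would otherwise be counted twice.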

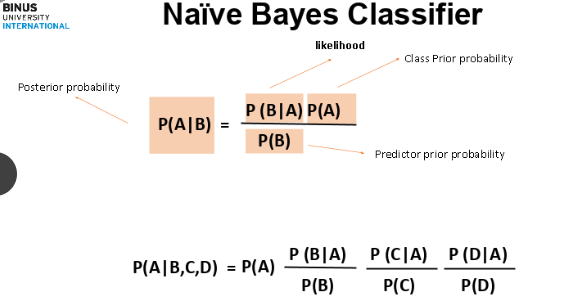

Lastly, we learned about naive Bayes calculations.
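To make the calculation concrete, here is a small sketch of a categorical naive Bayes computation done by counting. The toy weather table, the query, and the function name `naive_bayes_posterior` are all made up for illustration and are not from the course material. The sketch assumes features are conditionally independent given the class and uses no smoothing, so an unseen feature value gives a probability of zero.

```python
from collections import Counter
from fractions import Fraction

# Hypothetical toy data: (outlook, temperature, play?)
rows = [
    ("sunny", "hot", "no"),
    ("sunny", "mild", "no"),
    ("rainy", "mild", "yes"),
    ("rainy", "cool", "yes"),
    ("overcast", "hot", "yes"),
    ("sunny", "cool", "yes"),
]

def naive_bayes_posterior(rows, query):
    """Return P(class | query) for each class, estimated from counts."""
    class_counts = Counter(r[-1] for r in rows)
    scores = {}
    for label, n_label in class_counts.items():
        # Prior: P(class)
        score = Fraction(n_label, len(rows))
        # Likelihood: product of P(feature_i = value | class)
        for i, value in enumerate(query):
            matches = sum(1 for r in rows if r[-1] == label and r[i] == value)
            score *= Fraction(matches, n_label)
        scores[label] = score
    total = sum(scores.values())
    if total == 0:
        raise ValueError("every class has zero probability for this query")
    # Normalize so the class probabilities sum to 1.
    return {label: s / total for label, s in scores.items()}

posterior = naive_bayes_posterior(rows, ("sunny", "mild"))
print(posterior["yes"], posterior["no"])  # 1/5 4/5
```

By hand, the unnormalized scores are 2/3 · 1/4 · 1/4 = 1/24 for "yes" and 1/3 · 1 · 1/2 = 1/6 for "no". Dividing each by their sum, 5/24, gives 1/5 and 4/5.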